Supercomputers drive today’s biggest leaps in AI and science, and the NVIDIA DGX H100 is at the center of it all. Most people focus on the wild price tag, and with good reason: a single DGX H100 system can run up to $460,000. That sounds staggering until you realize this machine is unlocking breakthroughs in medicine, genomics, and large language models that were impossible just a year ago.

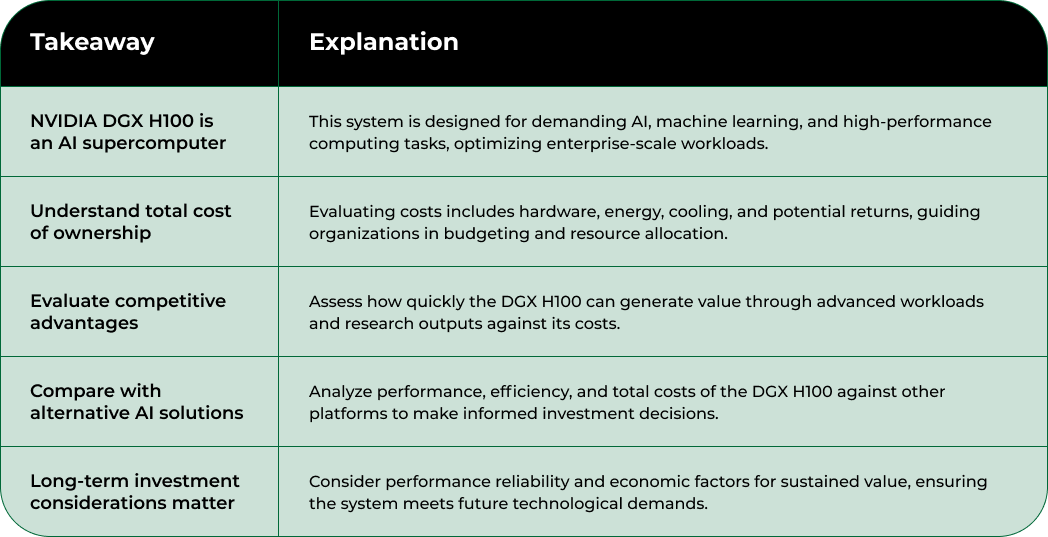

The NVIDIA DGX H100 represents a cutting-edge AI supercomputer designed to address the most demanding computational challenges in artificial intelligence, machine learning, and high-performance computing. As the pinnacle of NVIDIA’s enterprise-grade AI infrastructure solutions, this system delivers unprecedented computational capabilities for organizations pushing the boundaries of AI research and development.

At its core, the NVIDIA DGX H100 is a specialized server system engineered to accelerate complex AI workloads. Unlike traditional computing infrastructure, this system integrates multiple NVIDIA H100 Tensor Core GPUs, creating a powerful computational environment optimized for AI training, inference, and data analytics. Learn more about high-performance computing infrastructure that supports advanced technological development.

Key characteristics of the NVIDIA DGX H100 include:

The NVIDIA DGX H100 distinguishes itself through extraordinary technical specifications. According to research from the University of Strathclyde’s CMAC centre, these systems can process immense datasets and train complex AI models with remarkable efficiency.

Each system includes eight H100 GPUs, each with 80 GB of high-bandwidth memory (640 GB in total), enabling organizations to tackle computational challenges that were previously impossible.

Primary applications for the NVIDIA DGX H100 span multiple domains, including:

By providing a comprehensive, integrated solution for AI computational needs, the NVIDIA DGX H100 represents more than just hardware. It is a transformative platform enabling breakthrough innovations across scientific, medical, and technological domains.

Navigating the complex landscape of high-performance computing requires more than technological understanding. For enterprises and research institutions, understanding the economic implications of advanced AI infrastructure like the NVIDIA DGX H100 is crucial for strategic decision-making and resource allocation. Explore AI computing infrastructure trends to gain deeper insights into technological investments.

The NVIDIA DGX H100 represents a significant financial investment that goes far beyond its initial purchase price. According to Silicon Data’s GPU rental price index, understanding the total cost of ownership involves analyzing multiple economic factors. These include not just hardware costs, but also energy consumption, cooling requirements, maintenance expenses, and potential computational returns.
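The cost factors listed above can be combined into a rough total cost of ownership estimate. The sketch below is illustrative only: the `TcoInputs` fields, the use of a PUE multiplier to stand in for cooling overhead, and every number except the $460,000 purchase price quoted in this article are assumptions, not vendor figures.

```python
from dataclasses import dataclass


@dataclass
class TcoInputs:
    purchase_price_usd: float       # up-front system price
    avg_power_kw: float             # average electrical draw of the system
    electricity_usd_per_kwh: float  # local energy price
    pue: float                      # facility overhead multiplier (cooling, power delivery)
    annual_maintenance_usd: float   # support contract, spares, staff time
    years: float                    # planning horizon


def total_cost_of_ownership(inp: TcoInputs) -> float:
    """Purchase price plus energy (scaled by PUE) plus maintenance over the horizon."""
    hours = inp.years * 365 * 24
    energy_cost = inp.avg_power_kw * hours * inp.pue * inp.electricity_usd_per_kwh
    maintenance_cost = inp.annual_maintenance_usd * inp.years
    return inp.purchase_price_usd + energy_cost + maintenance_cost


example = TcoInputs(
    purchase_price_usd=460_000,     # figure quoted in the article
    avg_power_kw=8.0,               # hypothetical average draw
    electricity_usd_per_kwh=0.10,   # hypothetical energy price
    pue=1.5,                        # hypothetical facility overhead
    annual_maintenance_usd=20_000,  # hypothetical support cost
    years=3,
)
print(f"3-year TCO: ${total_cost_of_ownership(example):,.0f}")
```

Under these placeholder inputs the purchase price dominates the result, so it is worth rerunning the estimate with local energy prices and real support quotes before drawing conclusions.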

Key financial considerations include:

For organizations contemplating AI infrastructure investments, the NVIDIA DGX H100 demands comprehensive cost-benefit analysis. The system’s extraordinary computational capabilities must be weighed against its substantial financial requirements. Enterprises must evaluate how quickly the system can generate value through advanced AI workloads, research capabilities, and potential commercial applications.
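One simple way to frame the cost-benefit question is a break-even comparison against renting equivalent capacity. The sketch below assumes a flat hourly rental rate and a flat hourly running cost for an owned system; the function name `breakeven_hours` and both hourly rates are hypothetical, and only the $460,000 purchase price comes from this article.

```python
def breakeven_hours(purchase_price_usd: float,
                    owned_cost_usd_per_hour: float,
                    rental_usd_per_hour: float) -> float:
    """Hours of use at which buying becomes cheaper than renting.

    Solves purchase + owned_rate * h == rental_rate * h for h.
    """
    saving_per_hour = rental_usd_per_hour - owned_cost_usd_per_hour
    if saving_per_hour <= 0:
        raise ValueError(
            "rental rate must exceed the hourly running cost for a break-even point to exist"
        )
    return purchase_price_usd / saving_per_hour


hours = breakeven_hours(
    purchase_price_usd=460_000,   # figure quoted in the article
    owned_cost_usd_per_hour=2.0,  # hypothetical power, cooling, and maintenance
    rental_usd_per_hour=25.0,     # hypothetical rate for an 8-GPU node
)
print(f"Break-even after {hours:,.0f} hours ({hours / (365 * 24):.1f} years of continuous use)")
```

The result is sensitive to utilization: a system that sits idle half the time takes twice as long in calendar terms to reach the same number of productive hours.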

Critical evaluation metrics for assessing the DGX H100’s value include:

Understanding the nuanced economic landscape of high-performance computing systems like the NVIDIA DGX H100 is not just about technological prowess. It represents a strategic approach to evaluating transformative technological investments that can fundamentally reshape an organization’s computational capabilities and competitive positioning.

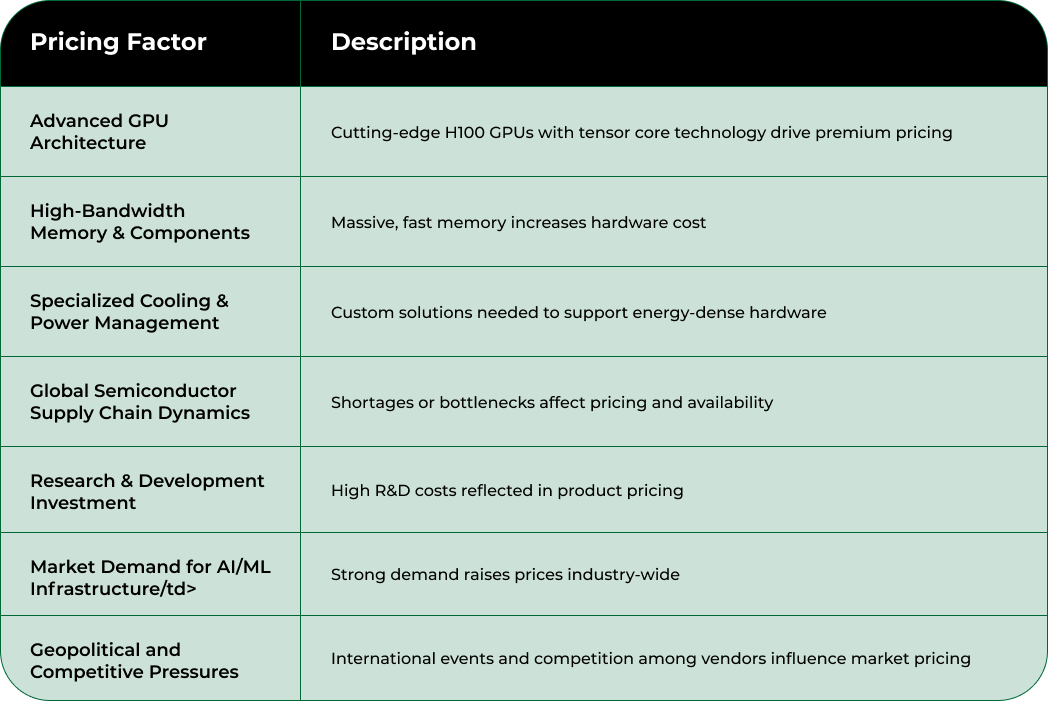

The pricing of the NVIDIA DGX H100 depends on multiple technical, market, and strategic factors that extend far beyond simple hardware costs. Learn more about our comprehensive computing infrastructure solutions to understand the intricate pricing dynamics of advanced AI systems.

The core technological components significantly drive the NVIDIA DGX H100’s pricing structure. According to GPU pricing analysis, a single DGX H100 system with 8 H100 GPUs can be priced around $460,000, including essential support services. The extraordinary computational capabilities of these GPUs, characterized by their advanced tensor core technology and massive memory bandwidth, directly contribute to the system’s substantial cost.
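To put the system price in per-GPU terms, the arithmetic can be sketched as follows. Note that the quoted price covers the whole system (chassis, CPUs, networking, support), so the per-GPU figure is an implied share, not the price of a standalone H100. The three-year horizon and 60% utilization are assumptions chosen for illustration.

```python
SYSTEM_PRICE_USD = 460_000  # approximate figure quoted in the article
GPUS_PER_SYSTEM = 8         # GPU count quoted in the article


def cost_per_gpu_hour(system_price_usd: float, gpus: int,
                      years: float, utilization: float) -> float:
    """Spread the purchase price over the GPU-hours actually used."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    busy_hours = years * 365 * 24 * utilization
    return system_price_usd / (gpus * busy_hours)


price_per_gpu = SYSTEM_PRICE_USD / GPUS_PER_SYSTEM
hourly = cost_per_gpu_hour(SYSTEM_PRICE_USD, GPUS_PER_SYSTEM,
                           years=3, utilization=0.6)  # hypothetical horizon and load

print(f"Implied system cost per GPU: ${price_per_gpu:,.0f}")
print(f"Amortized hardware cost per GPU-hour: ${hourly:.2f}")
```

This covers hardware amortization only; energy, cooling, and staffing would need to be added for a full hourly cost.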

Key technological pricing factors include:

Beyond raw technological specifications, market dynamics play a crucial role in determining the NVIDIA DGX H100’s pricing. Factors such as global semiconductor supply chains, research and development investments, and the current demand for high-performance computing infrastructure create a complex pricing landscape.

Critical market influences on pricing include:

The NVIDIA DGX H100’s pricing reflects not just a hardware purchase, but an investment in cutting-edge computational infrastructure designed to solve the most complex technological challenges across scientific, industrial, and research domains.

The table below organizes the primary factors influencing the price of the NVIDIA DGX H100, clearly indicating how both technical and market considerations impact overall cost.

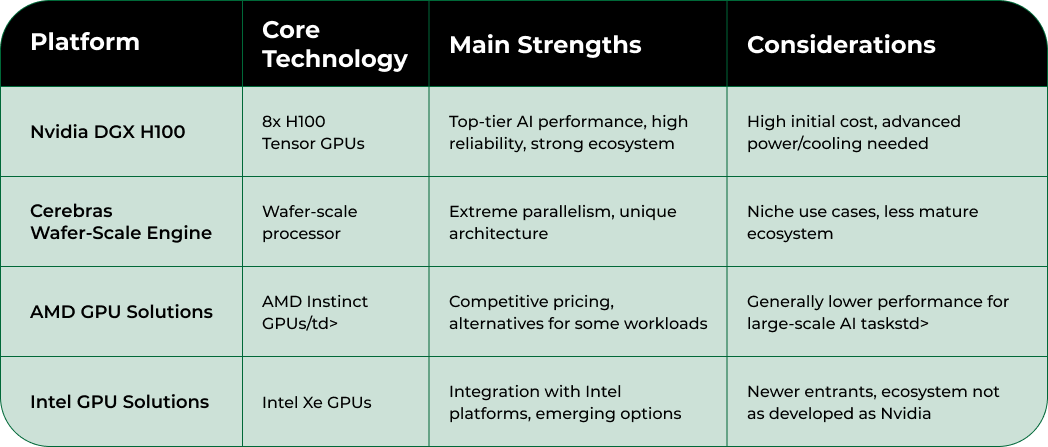

Comparing the NVIDIA DGX H100 with alternative high-performance computing solutions requires a comprehensive analysis that extends beyond initial purchase price. Explore flexible financing options for advanced computing infrastructure to understand the total cost of ownership for different AI computing platforms.

The evaluation of computational alternatives demands a nuanced approach that considers multiple dimensions of performance and cost-effectiveness. According to comparative research on machine learning systems, the NVIDIA DGX H100 stands out not just for its raw computational power, but for its efficiency in handling complex AI workloads.

Key comparative evaluation criteria include:

Competitive alternatives to the NVIDIA DGX H100 present varied technological approaches. Emerging technologies like Cerebras’ wafer-scale engine (WSE) and other GPU-based solutions from manufacturers such as AMD and Intel offer different computational paradigms. However, the NVIDIA DGX H100 typically demonstrates superior performance in large-scale AI and machine learning applications.

Critical considerations when comparing alternatives:

Ultimately, selecting the right AI computing infrastructure requires a holistic assessment that balances technological capabilities, financial constraints, and specific organizational computational requirements.

To help readers compare leading AI computing solutions, the following table summarizes key differences between the NVIDIA DGX H100 and notable alternatives as discussed in the article.

The strategic decision to invest in the NVIDIA DGX H100 requires a sophisticated evaluation framework that transcends traditional hardware procurement models. Explore our comprehensive infrastructure investment strategies to understand the nuanced approach to high-performance computing investments.

Long-term value assessment begins with understanding the system’s computational reliability and sustained performance. According to research analyzing GPU system resilience, the NVIDIA DGX H100 demonstrates remarkable energy efficiency and consistent performance across complex workloads. Empirical measurements indicate that these systems can draw approximately 18% less power than the manufacturer’s ratings while maintaining exceptional computational capabilities.
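The effect of that 18% gap on an energy budget can be estimated with a few lines of arithmetic. In the sketch below, the 10.2 kW rated maximum and the $0.10 per kWh electricity price are assumptions (check the current datasheet and local tariffs), and continuous year-round operation is assumed for simplicity.

```python
HOURS_PER_YEAR = 365 * 24

RATED_POWER_KW = 10.2      # assumed rated maximum system power; verify against the datasheet
MEASURED_REDUCTION = 0.18  # "approximately 18% lower" figure cited in the article
ELECTRICITY_USD_PER_KWH = 0.10  # hypothetical energy price


def annual_energy_kwh(power_kw: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used in a year at a constant power draw."""
    return power_kw * hours


measured_power_kw = RATED_POWER_KW * (1 - MEASURED_REDUCTION)
rated_kwh = annual_energy_kwh(RATED_POWER_KW)
measured_kwh = annual_energy_kwh(measured_power_kw)
saved_kwh = rated_kwh - measured_kwh

print(f"Budgeted at rating: {rated_kwh:,.0f} kWh/year")
print(f"Budgeted at measured draw: {measured_kwh:,.0f} kWh/year")
print(f"Difference: {saved_kwh:,.0f} kWh/year "
      f"(about ${saved_kwh * ELECTRICITY_USD_PER_KWH:,.0f} at the assumed price)")
```

Capacity planning based purely on rated power would therefore overestimate the energy bill under these assumptions, although power and cooling provisioning still has to cover the rated peak.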

Key long-term performance indicators include:

Beyond raw computational power, the NVIDIA DGX H100 represents a strategic technological investment. Organizations must consider multiple economic dimensions, including potential research output, computational revenue generation, and the system’s ability to tackle increasingly complex AI and machine learning challenges.

Critical long-term investment evaluation factors:

Investing in the NVIDIA DGX H100 is not merely a hardware purchase but a commitment to cutting-edge computational infrastructure that can potentially transform an organization’s technological capabilities and research potential.

Choosing the right AI infrastructure is about more than specs and price tags. The article showed just how quickly costs and complexity can spiral with systems like the NVIDIA DGX H100. Most teams struggle to balance advanced GPU performance, long-term value, and total cost of ownership while scaling up. This is where Nodestream becomes the solution for your high-stakes computing challenges. Our platform directly addresses those pain points. We provide instant access to enterprise-grade GPU servers and AI-ready systems, all on a marketplace built for secure, transparent transactions.

Why keep wrestling with uncertainty or outdated hardware while your projects fall behind? Visit Nodestream – your marketplace for high-performance AI and HPC infrastructure. Browse real-time listings and unlock fast procurement, verified sourcing, and expert support for all your AI ambitions. Your next step toward reliable, enterprise-scale compute is just one click away. Start solving your toughest digital challenges with our solutions for buying, selling, and scaling HPC assets today. Act now to stay ahead of the competition and bring clarity to your infrastructure decisions.

The NVIDIA DGX H100 integrates multiple H100 Tensor Core GPUs in a specialized server system, designed to accelerate complex AI workloads for enterprise-scale machine learning and high-performance computing applications.

The NVIDIA DGX H100 typically exhibits superior performance in handling large-scale AI and machine learning tasks compared to alternatives like Cerebras’ wafer-scale engine and GPU solutions from AMD and Intel, despite its higher price point.

The pricing of the NVIDIA DGX H100 is influenced by factors such as the core technological components, including advanced GPU architecture and memory configurations, as well as market dynamics like global supply chain conditions and R&D investments.

Understanding the total cost of ownership is crucial for organizations as it encompasses not just the initial acquisition cost, but also ongoing expenses like power consumption, cooling requirements, maintenance, and potential revenue generated from advanced AI workloads.