Machine learning once demanded supercomputers and weeks of patient waiting just to train a single model. Now, thanks to GPUs, researchers can cut training times by up to 90 percent, opening the door to faster experimentation and more rapid breakthroughs. Many people think of GPUs as gaming hardware, but they are also the engine behind recent advances in healthcare, finance, and even self-driving cars.

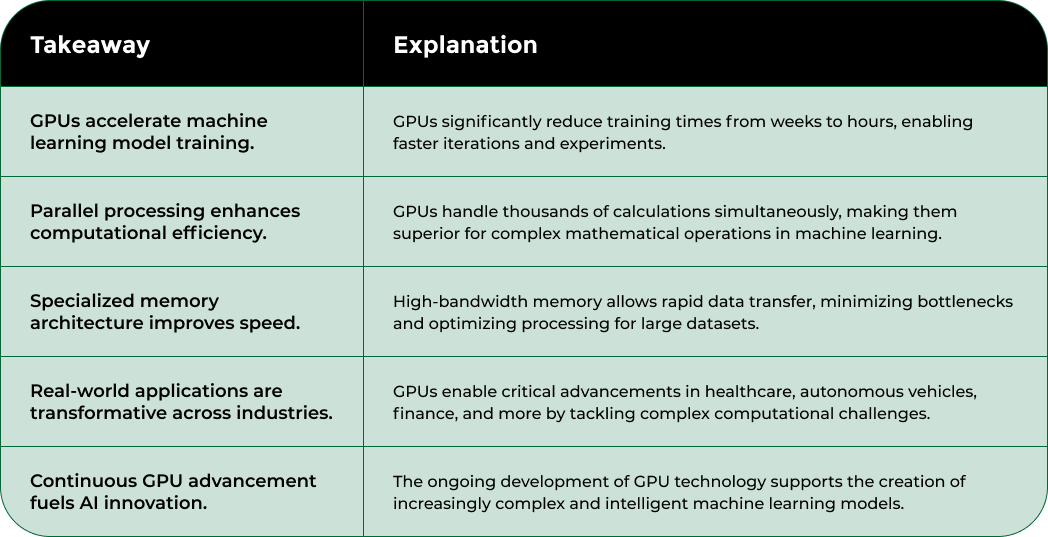

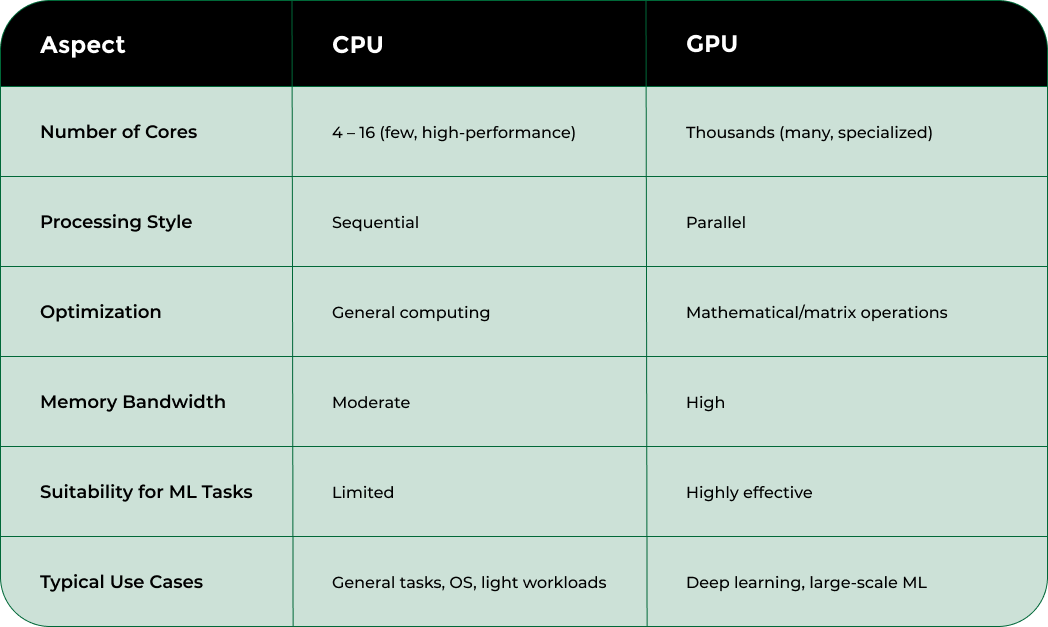

Graphics Processing Units (GPUs) have transformed machine learning from a computationally intensive challenge into an accessible and powerful technology. Unlike traditional Central Processing Units (CPUs), GPUs are specifically designed to handle massively parallel workloads, making them ideal for complex machine learning algorithms.

GPUs possess an architectural advantage that sets them apart in machine learning processing. Where a typical desktop CPU has roughly 4 to 16 cores optimized for sequential processing (server CPUs have more, but still far fewer than a GPU), modern GPUs contain thousands of smaller, more specialized cores that execute many calculations simultaneously. NVIDIA Research demonstrates that this parallel processing capability allows machine learning models to train dramatically faster than on CPUs alone.

The core strength of GPUs in machine learning lies in their ability to perform numerous mathematical operations in parallel. Neural networks, particularly deep learning models, require immense matrix multiplication and vector calculations. GPUs excel at these tasks by breaking down complex computational problems into smaller, manageable chunks that can be processed concurrently.
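The idea of splitting a matrix multiplication into chunks can be sketched in plain Python. This is only an illustration under stated assumptions: the function names `matmul_tile` and `tiled_matmul` are hypothetical, the matrices are tiny, and CPU threads stand in for GPU cores. It shows the structure of the work (independent tiles), not real GPU performance.

```python
from concurrent.futures import ThreadPoolExecutor


def matmul_tile(a, b, row_range, col_range):
    """Compute one rectangular tile of the product a @ b.

    Each output element is an independent dot product, so a tile needs
    nothing from any other tile.
    """
    inner = len(b)
    return {
        (i, j): sum(a[i][k] * b[k][j] for k in range(inner))
        for i in row_range
        for j in col_range
    }


def tiled_matmul(a, b, tile=2, workers=4):
    """Multiply two matrices (lists of lists) one tile at a time."""
    rows, cols = len(a), len(b[0])
    # Split the output matrix into tile x tile blocks (edge blocks may be smaller).
    tiles = [
        (range(r, min(r + tile, rows)), range(c, min(c + tile, cols)))
        for r in range(0, rows, tile)
        for c in range(0, cols, tile)
    ]
    result = [[0] * cols for _ in range(rows)]
    # Threads stand in for GPU cores here; tiles may finish in any order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(matmul_tile, a, b, rr, cr) for rr, cr in tiles]
        for future in futures:
            for (i, j), value in future.result().items():
                result[i][j] = value
    return result


a = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
b = [[1, 0, 2], [0, 1, 0], [3, 0, 1]]
product = tiled_matmul(a, b)
print(product)
```

Because no tile depends on another, the same decomposition scales from four threads to thousands of GPU cores without changing the logic.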

Machine learning workflows demand extraordinary computational power, especially during model training phases. GPUs provide this power through several key mechanisms:

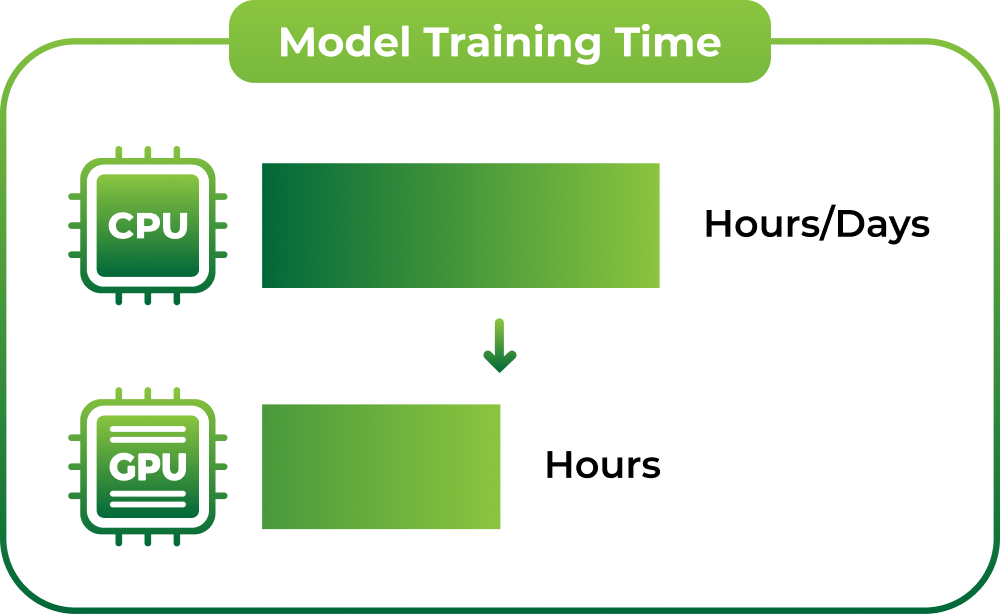

Research indicates that using specialized machine learning GPUs can reduce training times from weeks to hours, enabling researchers and data scientists to iterate and experiment more rapidly.

The computational efficiency of GPUs has become so critical that they are now considered essential infrastructure for advanced artificial intelligence research and development.

By transforming complex mathematical computations into streamlined, parallel processes, GPUs have become the backbone of modern machine learning technologies, driving innovations across industries from healthcare to autonomous vehicle development.

Below is a comparison of CPUs and GPUs in the context of machine learning, summarizing their key architectural and performance differences for readers.

Machine learning model training represents one of the most computationally demanding processes in modern artificial intelligence, and Graphics Processing Units (GPUs) have emerged as the critical technology enabling breakthrough performance. These specialized processors transform complex mathematical computations into rapid, parallel processing capabilities that dramatically accelerate neural network development.

Training machine learning models involves processing massive datasets through intricate neural network architectures, which can require billions of arithmetic operations for a single pass over one batch of data. Traditional CPUs struggle with these requirements, whereas GPUs can execute thousands of calculations simultaneously. MIT Technology Review highlights that modern deep learning models demand far greater computational resources than traditional computing workloads.
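To give a rough sense of scale for the arithmetic described above, the sketch below counts the operations in one forward pass of a single fully connected layer. The helper name `dense_layer_flops` and the layer sizes are hypothetical, chosen only for illustration, and the count uses the common convention of two operations (one multiply, one add) per term of each dot product.

```python
def dense_layer_flops(batch, in_features, out_features):
    """Approximate floating-point operations for one dense-layer forward pass.

    The layer multiplies a (batch x in_features) matrix by an
    (in_features x out_features) matrix. Each output element is a dot
    product of length in_features, counted as 2 * in_features operations.
    """
    return 2 * batch * in_features * out_features


# Hypothetical sizes: a batch of 64 inputs through a 4096 x 4096 layer.
flops = dense_layer_flops(batch=64, in_features=4096, out_features=4096)
print(f"{flops:,} operations for one forward pass of one layer")
```

A full model stacks many such layers and repeats the pass (plus a backward pass) for every batch, which is why the total grows so quickly.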

Neural networks depend on matrix multiplication and tensor operations, which GPUs handle with unprecedented efficiency. By distributing computational tasks across numerous specialized cores, GPUs can process complex mathematical transformations in parallel, reducing training times from weeks to mere hours. This acceleration enables data scientists and researchers to iterate rapidly, experiment more extensively, and develop increasingly sophisticated machine learning models.
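How much a training job actually speeds up depends on how much of it can run in parallel. One standard way to reason about this is Amdahl's law, which the article itself does not cite; the sketch below applies it with assumed parallel fractions and an assumed count of 1,000 workers, purely for illustration.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Overall speedup when only part of a job can be parallelised.

    parallel_fraction: share of the original runtime that can be split
    across workers (between 0.0 and 1.0).
    workers: number of parallel workers sharing that portion.
    """
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Assumed fractions, not measurements from any real training job.
for fraction in (0.90, 0.99, 0.999):
    speedup = amdahl_speedup(fraction, workers=1000)
    print(f"parallel fraction {fraction}: about {speedup:.1f}x faster")
```

The takeaway is that the weeks-to-hours reductions described above are plausible mainly because neural network training is dominated by matrix operations that parallelise almost completely; any serial portion caps the achievable gain.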

The performance gains from utilizing GPUs in machine learning are substantial and measurable:

Advanced machine learning models in fields like computer vision, natural language processing, and predictive analytics rely heavily on these computational advantages. Learn more about cutting-edge HPC infrastructure that supports these sophisticated computational requirements. The continuous evolution of GPUs ensures that machine learning researchers can push the boundaries of artificial intelligence, developing increasingly complex and intelligent systems that were previously considered impossible.

Artificial Intelligence (AI) demands extraordinary computational power, and Graphics Processing Units (GPUs) have become the fundamental technology enabling unprecedented efficiency in complex computational tasks. The unique architectural design of GPUs transforms traditional computing limitations, providing a revolutionary approach to processing massive datasets and executing intricate machine learning algorithms.

Unlike traditional Central Processing Units (CPUs), GPUs are specifically engineered to handle thousands of computational tasks simultaneously. This parallel processing capability fundamentally changes how complex mathematical operations are executed. Stanford University Research demonstrates that GPUs can perform matrix multiplications and tensor operations many times faster than conventional processors, making them critical for advanced AI model training.

The specialized architecture of GPUs includes thousands of smaller, more focused cores designed to work concurrently. This design allows for unprecedented computational density, where complex problems are broken down into smaller, manageable computational units that can be processed in parallel. Such an approach dramatically reduces the time required for machine learning model training, transforming weeks-long processes into hours or even minutes.

The computational efficiency of GPUs in AI applications can be quantified through several key performance indicators:

Explore cutting-edge AI computing infrastructure that leverages these advanced computational techniques. The continuous evolution of GPUs ensures that AI researchers and developers can tackle increasingly complex computational challenges, pushing the boundaries of what is possible in artificial intelligence, machine learning, and data science.

Effective machine learning requires sophisticated computational infrastructure, with GPUs serving as the critical foundation for advanced AI model development. The strategic selection of GPUs involves understanding their specialized features that directly impact computational performance, efficiency, and model training capabilities.

Memory performance represents a fundamental differentiator in machine learning GPUs. High-bandwidth memory (HBM) technologies enable rapid data transfer between the GPU's memory and its processing cores, reducing the bottleneck that occurs when cores sit idle waiting for data. IEEE Computer Society Research indicates that modern machine learning GPUs can achieve memory bandwidth exceeding 2 terabytes per second, enabling complex neural network training with far greater speed and efficiency.

The memory architecture of GPUs determines how quickly large datasets can be processed and how efficiently machine learning models can access and manipulate training information. Specialized memory designs with increased bandwidth and reduced latency provide machine learning researchers with the computational flexibility required for complex algorithmic training.
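A back-of-the-envelope calculation shows why bandwidth matters. The sketch below estimates the minimum time to read a model's weights once from memory. The model size (1 billion parameters at 2 bytes each) and the 50 GB/s comparison figure are assumptions for illustration only; the 2 TB/s figure is the one cited above. Real workloads also depend on caching, access patterns, and compute limits, so treat the results as lower bounds rather than predictions.

```python
def seconds_to_stream(num_bytes, bandwidth_bytes_per_s):
    """Lower bound on the time to read num_bytes once at a given bandwidth."""
    return num_bytes / bandwidth_bytes_per_s


# Hypothetical model: 1 billion parameters stored as 16-bit (2-byte) floats.
model_bytes = 1_000_000_000 * 2

fast = seconds_to_stream(model_bytes, 2e12)   # 2 TB/s, the figure cited above
slow = seconds_to_stream(model_bytes, 50e9)   # assumed 50 GB/s, for comparison

print(f"at 2 TB/s:  {fast * 1000:.1f} ms per pass over the weights")
print(f"at 50 GB/s: {slow * 1000:.1f} ms per pass over the weights")
```

Since training reads the weights on every step, a difference of this size per pass compounds over millions of steps.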

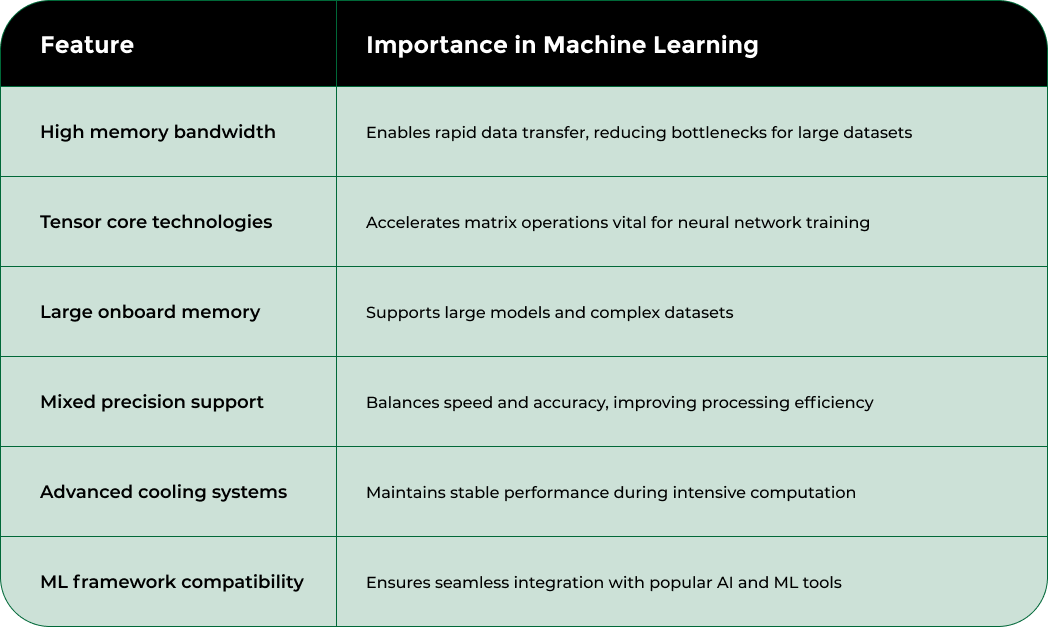

Here is a summary of key GPU features and their importance for effective machine learning, helping you identify the attributes that matter most in GPU selection.

Machine learning GPUs distinguish themselves through several critical performance characteristics:

Explore advanced AI computing solutions that leverage these sophisticated computational architectures. The continuous evolution of GPUs ensures that machine learning practitioners can develop increasingly complex models with greater computational efficiency, pushing the boundaries of artificial intelligence research and application development.

Graphics Processing Units (GPUs) have transcended their original graphics rendering purpose to become fundamental technologies driving innovation across numerous industries. Machine learning applications leverage GPUs to solve complex computational challenges that were previously considered impossible, transforming sectors from healthcare to autonomous transportation.

In medical imaging and diagnostics, GPUs enable breakthrough computational capabilities. National Institutes of Health Research demonstrates how deep learning algorithms powered by GPUs can analyze medical images with unprecedented accuracy, detecting subtle pathological patterns that human radiologists might overlook. These computational techniques dramatically reduce diagnostic times and improve early disease detection across multiple medical disciplines.

Advanced machine learning models trained on massive medical datasets can now identify potential cancer indicators, predict genetic disorders, and support personalized treatment strategies. The parallel processing capabilities of GPUs make these complex computational tasks feasible, essentially transforming how medical professionals approach diagnostic challenges.

Machine learning powered by GPUs drives innovation across multiple critical domains:

Discover cutting-edge AI computing infrastructure that supports these transformative technologies. The continuous advancement of GPUs ensures that machine learning applications will continue pushing technological boundaries, solving increasingly complex computational challenges across global industries.

If your team is struggling with long model training times, scaling complex neural networks, or simply managing the high demand for computational efficiency discussed in this article, you are not alone. As you have seen, the right GPU infrastructure is critical for staying competitive and advancing your AI initiatives. Modern projects demand solutions that remove bottlenecks, speed up iteration, and support both current and future workload requirements.

Take control today with Nodestream by Blockware Solutions. Our powerful marketplace offers you direct access to real-time GPU inventory, transparent bulk ordering, and fully managed deployment support tailored for machine learning and advanced AI needs. Whether you are looking to upgrade your model training setup, add scalable GPU servers, or monetize surplus equipment, we deliver enterprise-grade resources and support every step of the way. Explore our HPC and AI-ready solutions now or learn more about cutting-edge AI computing infrastructure. Rethink what’s possible and give your team the speed it deserves before your competitors do.

GPUs, or Graphics Processing Units, are essential in machine learning as they handle massive parallel computational tasks, allowing complex models to train significantly faster than traditional CPUs.

GPUs can execute thousands of calculations simultaneously, reducing model training times from weeks to hours or even minutes, enabling researchers to iterate and experiment more rapidly.

Key features to consider include high memory bandwidth, specialized tensor cores for matrix operations, large onboard memory capacity, and support for popular machine learning frameworks.

Parallel processing allows GPUs to handle multiple computations at once, which is crucial for processing large datasets and executing complex mathematical operations required in machine learning and AI applications.