GPU servers are changing how large datasets get processed. A single GPU can have over 4000 processing cores, compared to just 4 to 64 in a traditional server, although each GPU core is far simpler than a CPU core, so the two counts are not directly comparable. Most people think this much power is only for gamers or graphic designers. The real surprise is that these machines now drive everything from climate change research to stock market algorithms because they make previously impractical workloads feasible.

GPU servers are specialized computing systems that use graphics processing units (GPUs) for high-performance computational tasks beyond traditional graphics rendering. Unlike standard servers, these machines are engineered to handle massively parallel workloads in artificial intelligence, machine learning, scientific research, and complex data analysis.

A GPU server’s architecture differs from standard server configurations by prioritizing parallel processing. These systems integrate multiple high-performance GPUs, each capable of executing thousands of computational threads simultaneously.

The computational density of GPU servers lets organizations run parallelizable algorithms far faster than on traditional central processing unit (CPU) based systems. This architectural approach changes how computational challenges are addressed across multiple domains.
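To make the idea of thousands of simultaneous threads concrete, here is a minimal sketch in plain Python that mimics how a GPU kernel assigns one array element to each thread. The indexing follows the usual CUDA convention (global index = block index × block size + thread index). The names `vector_add_kernel` and `launch` are hypothetical, and the loops below run one after another, whereas real GPU hardware would execute the threads concurrently.

```python
def vector_add_kernel(block_idx, thread_idx, block_dim, a, b, out):
    """Work done by one simulated GPU thread: add a single pair of elements."""
    i = block_idx * block_dim + thread_idx  # global thread index
    if i < len(out):                        # guard: the last block may overshoot
        out[i] = a[i] + b[i]


def launch(kernel, n, block_dim, *args):
    """Simulate a kernel launch by visiting every (block, thread) pair in turn.

    On real hardware these iterations run in parallel; here they are serial.
    Returns the number of blocks needed to cover n elements.
    """
    num_blocks = (n + block_dim - 1) // block_dim  # ceiling division
    for block_idx in range(num_blocks):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)
    return num_blocks


n = 1000
a = list(range(n))
b = [2 * x for x in a]
out = [0] * n
blocks = launch(vector_add_kernel, n, 256, a, b, out)
print(f"{blocks} blocks of 256 threads covered {n} elements")
```

Because no thread reads a value another thread writes, the threads can run in any order or all at once, which is the property GPU hardware exploits.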

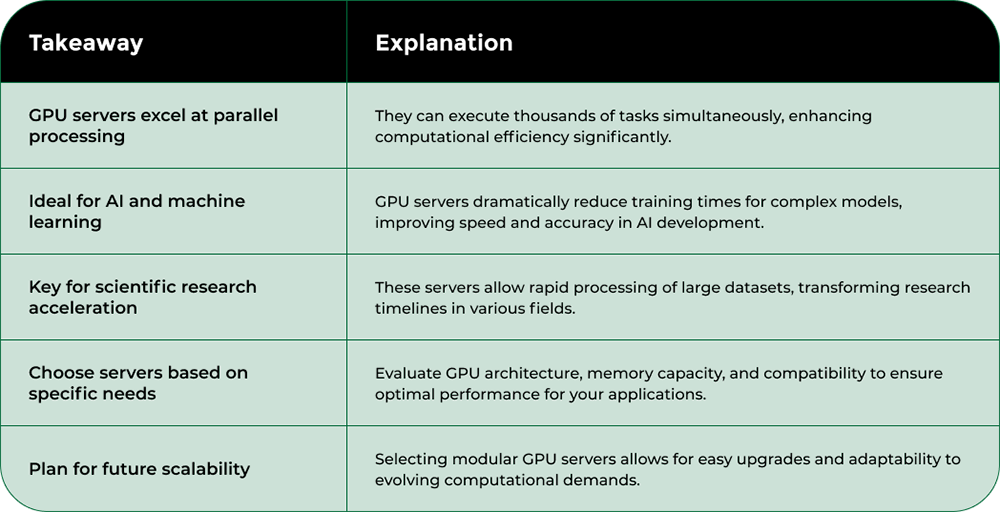

GPU servers excel in scenarios requiring massive parallel computation. Whether processing complex machine learning models, rendering high-resolution graphics, or conducting scientific simulations, these systems deliver high computational efficiency. When a workload divides into independent pieces, distributing it across many GPU cores can scale performance close to linearly, which makes these systems essential for research institutions, AI development teams, and enterprises managing data-intensive applications.
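How close scaling gets to linear depends on how much of a job can actually run in parallel. A standard way to reason about this is Amdahl's law, sketched below. The function name `amdahl_speedup` and the sample fractions are illustrative assumptions, not measurements from any particular GPU server.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Ideal speedup under Amdahl's law.

    parallel_fraction: share of the runtime that can be parallelized (0.0 to 1.0)
    workers: number of parallel processing units (at least 1)
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


# Illustrative fractions only; real workloads must be profiled.
for fraction in (0.50, 0.95, 0.99):
    cells = ", ".join(
        f"{n} workers: {amdahl_speedup(fraction, n):.1f}x"
        for n in (1, 8, 64, 4096)
    )
    print(f"parallel fraction {fraction:.2f} -> {cells}")
```

Even with thousands of cores, a job that is 95% parallel cannot exceed a 20x speedup under this model, because the remaining serial 5% dominates. Near-linear scaling therefore requires workloads with very little serial work.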

For organizations seeking advanced computational capabilities, exploring enterprise-grade GPU infrastructure solutions can unlock transformative potential in computational performance and research capabilities.

GPU servers have emerged as critical technological infrastructure transforming computational capabilities across multiple industries. By enabling unprecedented processing speeds and parallel computing potential, these systems are redefining how complex computational challenges are solved in scientific research, artificial intelligence, and advanced technological domains.

In scientific domains, GPU servers provide computational power that can dramatically reduce research timelines. This infrastructure allows researchers to process massive datasets and conduct sophisticated simulations that were previously impractical or impossible.

The ability to process massive computational tasks in significantly reduced timeframes represents a quantum leap in research methodology, enabling scientists to explore increasingly complex hypothetical scenarios with unprecedented efficiency.

Beyond research applications, GPU servers are driving economic innovation across multiple sectors. Financial institutions leverage these systems for advanced risk modeling, algorithmic trading, and real-time market analysis. Machine learning and artificial intelligence companies depend on GPU infrastructure to train complex neural networks and develop increasingly sophisticated algorithms.

Explore our comprehensive guide on high-performance computing infrastructure to understand how GPU servers are reshaping technological capabilities across industries. These powerful computing systems are not merely technological tools but fundamental drivers of innovation, enabling breakthroughs that were unimaginable just a decade ago.

GPU servers provide the computational infrastructure behind much of modern artificial intelligence and machine learning. By supplying massive parallel processing power, these systems let suitable algorithms run far faster than on traditional CPU-based architectures, accelerating research and development across multiple technological domains.

GPU servers provide critical advantages in machine learning model training. Traditional CPU-based systems struggle with the massive computational demands of deep learning algorithms, while GPU servers can process thousands of computational threads simultaneously.

The parallel architecture of GPU servers allows machine learning engineers to experiment with more complex model architectures and train them significantly faster, accelerating technological innovation.
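One reason deep learning maps so well onto this architecture is that its core operation, matrix multiplication, breaks into many independent dot products. The sketch below shows that structure in plain Python for a single dense layer. The helper names `output_cell` and `matmul` and the tiny input values are illustrative assumptions; production frameworks dispatch this work to optimized GPU libraries rather than Python loops.

```python
def output_cell(a, b, row, col):
    """Dot product for one output element; it depends on no other output cell."""
    return sum(a[row][k] * b[k][col] for k in range(len(b)))


def matmul(a, b):
    """Multiply matrices a (m x k) and b (k x n), given as lists of rows.

    Every (row, col) pair is an independent task, so on a GPU each one
    could be handed to its own thread. Here they are computed serially.
    """
    rows, cols = len(a), len(b[0])
    return [[output_cell(a, b, r, c) for c in range(cols)] for r in range(rows)]


# A toy dense layer: 3 samples with 2 features each, projected to 4 outputs.
inputs = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
weights = [[0.5, -1.0, 2.0, 0.0], [1.5, 0.0, 1.0, 1.0]]

activations = matmul(inputs, weights)
independent_tasks = len(activations) * len(activations[0])
print(f"{independent_tasks} independent dot products")
```

The count of independent tasks grows with batch size times layer width, so realistic models expose millions of such tasks per layer, far more than a CPU has cores to run at once.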

In artificial intelligence research, GPU servers enable transformative approaches to algorithm development. By supporting massive parallel computations, these systems allow researchers to explore increasingly sophisticated neural network designs that were previously computationally prohibitive.

Explore our comprehensive guide on AI computing infrastructure to understand how GPU servers are revolutionizing technological capabilities. These powerful systems are not just improving computational speed but fundamentally reshaping how complex AI challenges are approached and solved across industries.

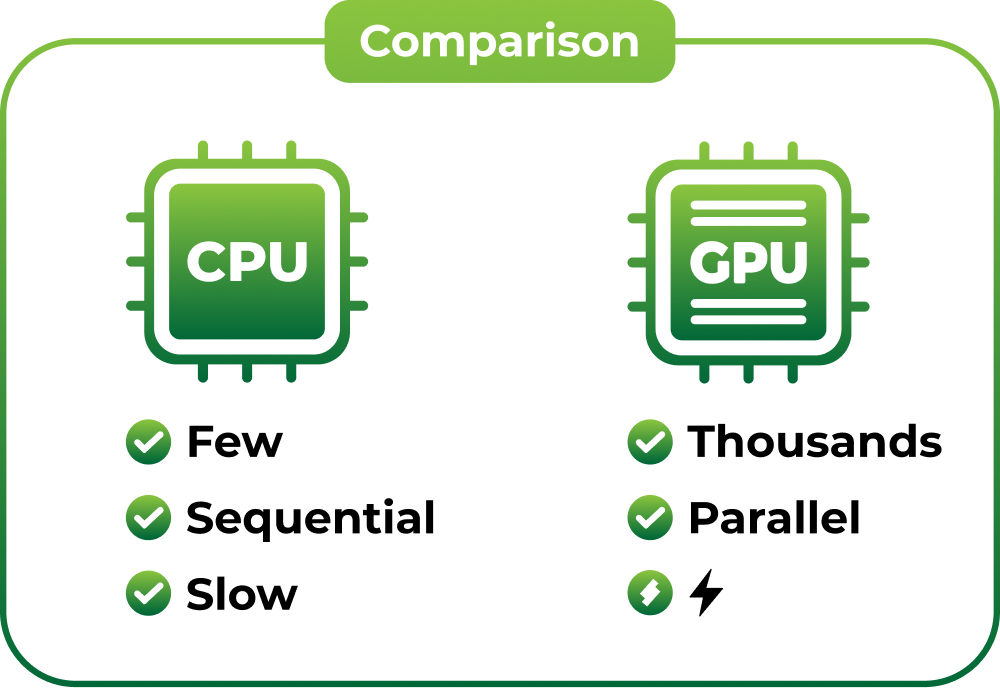

Traditional servers and GPU servers represent fundamentally different computational architectures designed to address distinct technological challenges. While standard servers rely primarily on central processing units (CPUs) for computation, GPU servers leverage specialized graphics processing units to deliver unprecedented parallel computing capabilities across complex computational tasks.

Traditional and GPU servers differ structurally. Traditional servers typically feature a limited number of CPU cores optimized for sequential processing, whereas GPU servers integrate multiple high-performance GPUs capable of executing thousands of computational threads simultaneously.

The fundamental design philosophy differs dramatically: traditional servers excel at linear, structured computational tasks, while GPU servers are engineered for massively parallel processing across complex, data-intensive workloads.

GPU servers perform markedly better in scenarios that require many computational threads to run at once. Scientific simulations, machine learning model training, and advanced graphics rendering are domains where GPU servers can outperform traditional server architectures by a wide margin, in some cases by orders of magnitude. The gain comes from parallelism, so it depends on how much of the workload can be split into independent pieces.

Learn more about enterprise computing infrastructure solutions to understand how these technological innovations are reshaping computational capabilities. The evolution from traditional to GPU-accelerated servers represents a quantum leap in computing performance, enabling technological breakthroughs previously considered impossible.

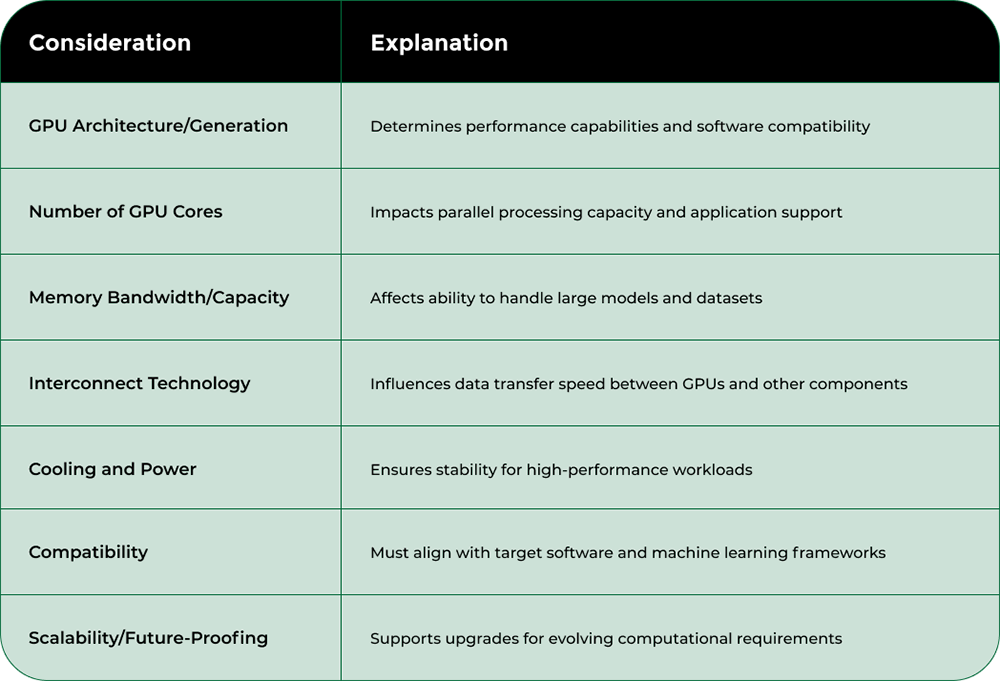

Selecting an appropriate GPU server requires careful evaluation of complex technical requirements and organizational computational needs. The decision involves analyzing multiple interconnected factors that directly impact computational performance, scalability, and long-term operational efficiency.

Server specifications should match the specific computational workload, so organizations must assess the technical parameters that determine overall system capability. Essential technical considerations include GPU architecture, the number of GPU cores, memory bandwidth, interconnect technologies, thermal management, and compatibility with the software and frameworks in use.

Comprehensive technical assessment ensures that selected GPU servers can effectively handle anticipated computational demands while providing optimal performance and energy efficiency.
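One way to weigh raw compute against memory bandwidth during such an assessment is the roofline model: attainable throughput is capped either by peak compute or by how fast memory can feed data to the cores, whichever is lower. The sketch below assumes a purely hypothetical accelerator (100 TFLOP/s peak, 2 TB/s memory bandwidth). The function name and every number are illustrative and do not describe any real product.

```python
def attainable_tflops(peak_tflops, mem_bandwidth_tbs, flops_per_byte):
    """Roofline estimate of achievable throughput in TFLOP/s.

    peak_tflops: peak compute rate of the device (TFLOP/s)
    mem_bandwidth_tbs: memory bandwidth of the device (TB/s)
    flops_per_byte: arithmetic intensity of the workload (FLOP per byte moved)
    """
    memory_bound_limit = mem_bandwidth_tbs * flops_per_byte
    return min(peak_tflops, memory_bound_limit)


# Hypothetical device figures, chosen only to illustrate the calculation.
PEAK_TFLOPS = 100.0
BANDWIDTH_TBS = 2.0

# Intensity at which the device shifts from memory-bound to compute-bound.
ridge_point = PEAK_TFLOPS / BANDWIDTH_TBS

for label, intensity in (("low intensity", 0.25), ("high intensity", 200.0)):
    limit = attainable_tflops(PEAK_TFLOPS, BANDWIDTH_TBS, intensity)
    bound = "memory-bound" if intensity < ridge_point else "compute-bound"
    print(f"{label}: {limit} TFLOP/s ({bound})")
```

Under these assumed figures, a workload performing few operations per byte is limited by memory bandwidth no matter how many cores the GPU has, which is why bandwidth belongs on the evaluation list alongside core count.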

Beyond immediate performance metrics, organizations must consider the long-term scalability and adaptability of their GPU server infrastructure. Selecting servers with modular designs and upgrade potential allows businesses to incrementally expand computational capabilities without complete system replacement. Factors like support for future GPU generations, expandable memory slots, and flexible interconnect technologies become crucial strategic considerations.

Explore enterprise-grade computing infrastructure solutions to understand how advanced server selection strategies can transform organizational computational capabilities. Intelligent GPU server selection represents a strategic investment in technological innovation and computational performance.

This table summarizes the key technical considerations organizations should evaluate when selecting a GPU server to ensure optimal performance and future scalability.

Struggling with the limitations of traditional servers when tackling large-scale AI, machine learning, or data-intensive workloads? As highlighted in this article, GPU servers provide the parallel computing power and advanced architecture needed to transform research, scale deployments, and achieve faster model training. But finding transparent, reliable, and scalable infrastructure can still be a major obstacle for teams aiming to stay ahead.

Take the next step by choosing a marketplace built for performance and confidence. On https://nodestream.blockwaresolutions.com you gain access to real-time inventory of enterprise-ready GPU servers, secure transactions, and expert support services from procurement to global deployment. Trust a platform designed for AI and HPC infrastructure so your organization can buy and sell the hardware you need—without costly delays or guesswork. Discover how Nodestream can simplify your next infrastructure upgrade or sale and ensure you have the compute power needed to accelerate innovation today.

A GPU server is a specialized computing system designed to use graphics processing units (GPUs) for high-performance tasks such as artificial intelligence, machine learning, scientific research, and data analysis, enabling massive parallel processing capabilities.

GPU servers utilize multiple high-performance GPUs to handle thousands of computational threads simultaneously, while traditional servers rely on central processing units (CPUs) optimized for fewer simultaneous tasks. This fundamental architectural difference results in superior performance for parallel processing tasks in GPU servers.

GPU servers are primarily used in areas requiring intense computational power, such as machine learning model training, scientific simulations, advanced graphics rendering, and complex data analysis in various industries including finance and healthcare.

When selecting a GPU server, consider factors such as GPU architecture, the number of GPU cores, memory bandwidth, interconnect technologies, thermal management, and compatibility with your specific software and frameworks to ensure optimal performance and scalability.